
Seismic data decomposition into spectral components using regularized nonstationary autoregression

![[pdf]](icons/pdf.png) |

Next: Autoregressive spectral analysis

Up: Fomel: Regularized nonstationary autoregression

Previous: Introduction

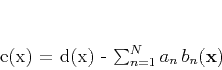

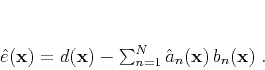

Regularized nonstationary regression (Fomel, 2009) is based on the following simple model. Let $d(\mathbf{x})$ represent the data as a function of data coordinates $\mathbf{x}$, and let $b_n(\mathbf{x})$, $n = 1, 2, \ldots, N$, represent a collection of basis functions. The goal of stationary regression is to estimate coefficients $a_n$, $n = 1, 2, \ldots, N$, such that the prediction error

$$
e(\mathbf{x}) = d(\mathbf{x}) - \sum_{n=1}^{N} a_n\,b_n(\mathbf{x}) \tag{1}
$$

is minimized in the least-squares sense. In the case of regularized nonstationary regression (RNR), the coefficients become variable:

$$
e(\mathbf{x}) = d(\mathbf{x}) - \sum_{n=1}^{N} a_n(\mathbf{x})\,b_n(\mathbf{x})\;. \tag{2}
$$
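Here is a minimal sketch of the stationary case in equation 1. Once the basis functions are sampled on a grid, an ordinary least-squares solver recovers the constant coefficients $a_n$. The code assumes sampled data and a hypothetical polynomial basis chosen only for illustration. `stationary_regression` is an illustrative name and is not part of any published code.

```python
import numpy as np

def stationary_regression(d, basis):
    """Least-squares estimate of the constant coefficients a_n in equation 1.

    d:     sampled data d(x), shape (nx,)
    basis: sampled basis functions b_n(x), one per row, shape (N, nx)
    """
    # Rows of the design matrix basis.T correspond to points x and columns
    # to basis functions; lstsq minimizes sum_x |d(x) - sum_n a_n b_n(x)|^2.
    a, *_ = np.linalg.lstsq(basis.T, d, rcond=None)
    return a

# Hypothetical example: quadratic polynomial basis on [0, 1].
x = np.linspace(0.0, 1.0, 101)
basis = np.vstack([np.ones_like(x), x, x**2])
d = 1.0 - 2.0 * x + 0.5 * x**2
a = stationary_regression(d, basis)
e = d - a @ basis  # prediction error of equation 1
```

The data in this example lie exactly in the span of the basis. As a result, `a` equals (1, -2, 0.5) up to rounding and `e` vanishes. With real data, the residual holds whatever constant coefficients cannot explain.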

The problem in equation 2 is underdetermined, because at every point $\mathbf{x}$ it provides a single equation for $N$ unknown coefficients. It can, however, be constrained by regularization (Engl et al., 1996). I use shaping regularization (Fomel, 2007) to control explicitly the resolution and variability of the regression coefficients.
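A short numerical check shows why equation 2 needs regularization. It assumes a random real basis chosen purely for illustration. Solving the single equation at each point independently fits any data exactly, and that fit is not unique.

```python
import numpy as np

rng = np.random.default_rng(0)
N, nx = 3, 200
basis = rng.standard_normal((N, nx))  # hypothetical real basis functions b_n(x)
d = rng.standard_normal(nx)           # arbitrary data d(x)

# Minimum-norm solution of the single equation d(x) = sum_n a_n(x) b_n(x),
# solved independently at every point x.
a = basis * d / np.sum(basis**2, axis=0)
e = d - np.sum(a * basis, axis=0)     # prediction error of equation 2

# Adding a field that is orthogonal to (b_1, b_2, b_3) at every x
# changes the coefficients but not the prediction error.
v = np.vstack([basis[1], -basis[0], np.zeros(nx)])
e_alt = d - np.sum((a + v) * basis, axis=0)
```

Both coefficient fields fit the data perfectly, and both jump erratically from sample to sample. Regularization must supply the missing preference for a useful solution.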

Shaping regularization applied to RNR amounts to linear inversion,

$$
\mathbf{a} = \mathbf{M}^{-1}\,\mathbf{c}\;, \tag{3}
$$

where $\mathbf{a}$ is a vector composed of the coefficients $a_n(\mathbf{x})$, the elements of vector $\mathbf{c}$ are

$$
c_i(\mathbf{x}) = \mathbf{S}\left[b_i^{*}(\mathbf{x})\,d(\mathbf{x})\right]\;, \tag{4}
$$

the elements of matrix $\mathbf{M}$ are

$$
M_{ij}(\mathbf{x}) = \lambda^2\,\delta_{ij} + \mathbf{S}\left[b_i^{*}(\mathbf{x})\,b_j(\mathbf{x}) - \lambda^2\,\delta_{ij}\right]\;, \tag{5}
$$

$\lambda$ is a scaling coefficient, $\mathbf{S}$ represents a shaping (typically smoothing) operator, and the asterisk denotes complex conjugation. When the inversion in equation 3 is implemented by an iterative method, such as conjugate gradients, strong smoothing makes $\mathbf{M}$ close to the identity and therefore easier to invert (fewer iterations). Weaker smoothing slows down the inversion but allows for more detail in the solution. This intuitively logical behavior distinguishes shaping regularization from alternative methods (Fomel, 2009).
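The following NumPy sketch forms the operators of equations 3, 4, and 5 explicitly for a small 1D problem. Two assumptions are worth flagging. First, the shaping operator $\mathbf{S}$ is a hypothetical triangle smoother: a box average applied twice, with truncated averages near the edges. Second, $\mathbf{M}$ is inverted with a dense direct solver instead of an iterative method such as conjugate gradients. A dense solve is only practical for small grids. The helper names `triangle_smoother` and `rnr` are illustrative.

```python
import numpy as np

def triangle_smoother(nx, radius):
    """Dense (nx, nx) matrix for a hypothetical shaping operator S:
    a box average applied twice (triangle smoothing). Near the edges the
    box is truncated and renormalized, so every row sums to one."""
    box = np.zeros((nx, nx))
    for k in range(nx):
        lo, hi = max(0, k - radius), min(nx, k + radius + 1)
        box[k, lo:hi] = 1.0 / (hi - lo)
    return box @ box

def rnr(d, basis, lam, smoother):
    """Solve a = M^{-1} c (equations 3-5) with M formed as a dense matrix.

    d:        sampled data d(x), shape (nx,)
    basis:    sampled basis functions b_n(x), shape (N, nx)
    lam:      scaling coefficient lambda
    smoother: matrix representing S, shape (nx, nx)
    Returns the coefficients a_n(x), shape (N, nx).
    """
    N, nx = basis.shape
    S = np.kron(np.eye(N), smoother)      # smooth each a_n(x) separately
    c = S @ (np.conj(basis) * d).ravel()  # equation 4
    # B[(i, x), (j, x)] = b_i^*(x) b_j(x): coefficients couple only at equal x
    B = np.zeros((N * nx, N * nx), dtype=np.result_type(basis, d))
    for i in range(N):
        for j in range(N):
            B[i * nx:(i + 1) * nx, j * nx:(j + 1) * nx] = np.diag(
                np.conj(basis[i]) * basis[j])
    I = np.eye(N * nx)
    M = lam**2 * I + S @ (B - lam**2 * I)  # equation 5
    return np.linalg.solve(M, c).reshape(N, nx)

# Usage: data synthesized from the constant coefficients 2 and 3.
rng = np.random.default_rng(1)
nx = 60
basis = rng.standard_normal((2, nx))
d = 2.0 * basis[0] + 3.0 * basis[1]
a = rnr(d, basis, lam=1.0, smoother=triangle_smoother(nx, radius=5))
```

Every row of this smoother sums to one, so $\mathbf{S}$ leaves constant signals unchanged. Substituting constant coefficients into equation 5 then gives $\mathbf{M}\,\mathbf{a} = \mathbf{c}$ exactly, and the constants 2 and 3 are recovered up to rounding. A smaller `radius` lets the recovered $a_n(x)$ vary more rapidly.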

Regularized nonstationary autoregression (RNAR) corresponds to the case of basis functions being causal translations of the input data itself. In 1D, with $\mathbf{x} = t$, this condition implies $b_n(t) = d(t - n\,\Delta t)$, where $\Delta t$ is the time sampling interval.
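For sampled 1D data, the RNAR basis functions are delayed copies of the trace. The sketch below builds $b_n(t) = d(t - n\,\Delta t)$. It assumes a regular time grid and treats samples before the start of the trace as zero. As a sanity check, it fits the stationary special case (constant $a_n$) to a pure sinusoid by least squares. A sinusoid of angular frequency $\omega$ obeys the exact recursion $d(t) = 2\cos(\omega\,\Delta t)\,d(t - \Delta t) - d(t - 2\,\Delta t)$, so two coefficients suffice and the fit recovers them. `causal_shift_basis` is a hypothetical helper name.

```python
import numpy as np

def causal_shift_basis(d, N):
    """Basis functions b_n(t) = d(t - n*dt), n = 1..N, on a regular grid.
    Samples before the start of the trace are taken as zero (an assumption)."""
    nt = len(d)
    basis = np.zeros((N, nt), dtype=d.dtype)
    for n in range(1, N + 1):
        basis[n - 1, n:] = d[:nt - n]  # delay the trace by n samples
    return basis

dt, omega = 0.004, 2 * np.pi * 30.0  # 4 ms sampling, 30 Hz sinusoid
t = np.arange(500) * dt
d = np.sin(omega * t)
basis = causal_shift_basis(d, 2)
# Stationary least-squares fit of d(t) = a_1 d(t - dt) + a_2 d(t - 2 dt),
# skipping the first two samples, where the delayed traces are zero-padded.
a, *_ = np.linalg.lstsq(basis[:, 2:].T, d[2:], rcond=None)
```

Here `a` matches $(2\cos(\omega\,\Delta t), -1)$. In RNAR proper, the same basis would go to a regularized nonstationary solver, which lets the coefficients vary with $t$.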


2013-10-09
