Next: Solution

Up: Fomel: Forward interpolation

Previous: Interpolation theory

A particular form of the solution (1) arises from

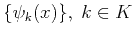

assuming the existence of a basis function set

, such that the function

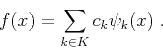

, such that the function  can be represented by a linear

combination of the basis functions in the set, as follows:

can be represented by a linear

combination of the basis functions in the set, as follows:

|

(8) |

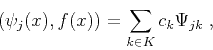

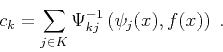

We can find the linear coefficients  by multiplying both

sides of equation (8) by one of the basis functions

(e.g.

by multiplying both

sides of equation (8) by one of the basis functions

(e.g.  ). Inverting the equality

). Inverting the equality

|

(9) |

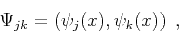

where the parentheses denote the dot product, and

|

(10) |

leads to the following explicit expression for the coefficients

:

:

|

(11) |

Here

refers to the

refers to the  component of the matrix,

which is the inverse of

component of the matrix,

which is the inverse of  . The matrix

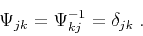

. The matrix  is invertible as

long as the basis set of functions is linearly independent. In the

special case of an orthonormal basis,

is invertible as

long as the basis set of functions is linearly independent. In the

special case of an orthonormal basis,  reduces to the identity

matrix:

reduces to the identity

matrix:

|

(12) |
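The computation in equations (9) through (11) can be sketched numerically. The snippet below is a minimal illustration and not the author's implementation: it assumes the dot product is approximated by a Riemann sum on a uniform grid, and the function name `gram_coefficients`, the monomial basis, and the test function are all hypothetical choices made for the example.

```python
import numpy as np

def gram_coefficients(basis, f, x):
    """Estimate the coefficients c_k of equation (11) on a uniform grid x."""
    dx = x[1] - x[0]
    # Rows of B hold the sampled basis functions psi_k(x).
    B = np.array([psi(x) for psi in basis])
    # Equation (10): matrix of dot products between basis functions,
    # with the dot product approximated by a Riemann sum (an assumption).
    gram = B @ B.T * dx
    # Right-hand side of equation (9): dot products (psi_j, f).
    rhs = B @ f(x) * dx
    # Equation (11), solved as a linear system rather than by forming the inverse.
    return np.linalg.solve(gram, rhs)

# Hypothetical example: a linearly independent, non-orthogonal basis {1, x, x^2}.
basis = [lambda x: np.ones_like(x), lambda x: x, lambda x: x**2]
f = lambda x: 2.0 - 3.0 * x + 0.5 * x**2
x = np.linspace(-1.0, 1.0, 201)
c = gram_coefficients(basis, f, x)
```

Because the test function lies in the span of the chosen basis, the recovered coefficients should match 2, -3, and 0.5 up to rounding error. Solving the linear system instead of inverting the matrix explicitly is a numerical convenience. It gives the same result as equation (11) whenever the basis is linearly independent.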

Equation (11) is a least-squares estimate of the coefficients $c_k$: one can alternatively derive it by minimizing the least-squares norm of the difference between $f(x)$ and the linear decomposition (8). For a given set of basis functions, equation (11) approximates the function $f(x)$ in formula (1) in the least-squares sense.
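The least-squares derivation mentioned above can be written out in a few lines. This is a sketch under two assumptions: the functions are real-valued, and the symbols $f(x)$, $\psi_k(x)$, $c_k$, and $E$ are notation chosen here for the function, the basis functions, the coefficients, and the squared misfit.

$$
\begin{aligned}
E(c) &= \Bigl(f - \sum_{k \in K} c_k \psi_k,\; f - \sum_{k \in K} c_k \psi_k\Bigr), \\
\frac{\partial E}{\partial c_j} &= -2\,(\psi_j, f) + 2 \sum_{k \in K} c_k\,(\psi_j, \psi_k) = 0 .
\end{aligned}
$$

Setting the derivative to zero for every $j$ reproduces equality (9), so inverting the matrix of dot products from equation (10) yields the estimate in equation (11).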

2014-02-21
