|

|

|

|

CuQ-RTM: A CUDA-based code package for stable and efficient Q-compensated reverse time migration |

Next: Examples Up: Architecture of the cu-RTM Previous: Module

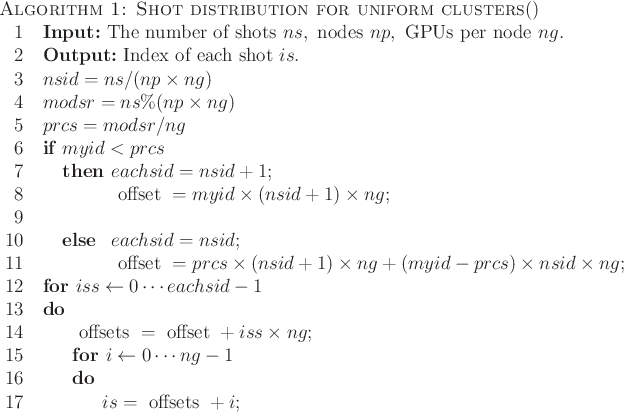

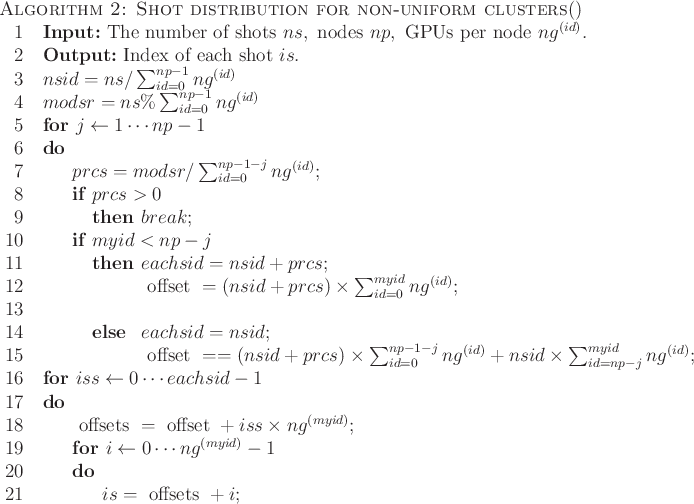

The code package described above is implemented for multiple NVIDIA GPUs using MPI, C, and CUDA in an MLP fashion. The execution is divided into two components. The first is responsible for the coarse-grained parallelization between nodes of the clusters, which is parallelized using MPI. The second performs calculations within each GPU using CUDA. One of the most important issues arising when working with a hybrid MPI/CUDA code is the proper mapping of MPI processes and threads to GPUs and nodes. Thus, we can evenly distribute all shots among every node and every GPU, while being aware of the precise index of each shot during simulation. In the package, we provide two distributing schemes to allow the framework to run on both uniform clusters (i.e., each node with the same number of integrated GPUs) and non-uniform clusters (i.e., a mixture of nodes with the different number and the types of integrated GPUs). Every MPI process (rank) MPI_Comm_rank(comm,&myid) inspects the configuration of the node being executed on, and all GPUs of each node are launched by streaming execution. Algorithms 1 and 2 provide shot distributing schemes to ensure load balancing on each node and device. All threads on host are synchronized by MPI_Barrier(comm) before migrated images from all shots are reduced by MPI_Allreduce(![]() ), which further guarantees less thread blocking time.

), which further guarantees less thread blocking time.